By: Ben Lykins, RPT Solutions Architect

Introduction

The following will walk through the necessary steps to deploy NeuVector via Helm. This can be done locally or on a virtual machine. I am using minikube for testing, but K3s, MicroK8s, or other Kubernetes distributions should also work. Since this is a scaled-down deployment, we will also limit replicas. This guide is intended for testing only and not for production use. Consult the official documentation for more information: SUSE NeuVector Docs.

What is SUSE NeuVector?

SUSE NeuVector, the leader in Full Lifecycle Container Security, delivers uncompromising end-to-end security for modern container infrastructures. SUSE NeuVector offers a cloud-native Kubernetes security platform with end-to-end vulnerability management, automated CI/CD pipeline security, and complete run-time security, including the industry’s only container firewall to block zero days and other threats.

What is Multipass?

Multipass is a tool to generate cloud-style Ubuntu VMs quickly on Linux, macOS, and Windows. It gives you a simple but powerful CLI that allows you to quickly access an Ubuntu command line or create your own local mini-cloud. Developers can use Multipass to prototype cloud deployments and to create fresh, customized Linux dev environments on any machine. Mac and Windows users can use Multipass as the quickest way to get an Ubuntu command line on their system. New Ubuntu users can use it as a sandbox to try new things without affecting their host machine, and without the need to dual boot.

Prerequisites

Required:

- A local or virtual machine with a K8s distribution for testing.

  - I will use multipass, which can launch an instance with minikube already installed.

Set up Virtual Machine

Since I have multipass installed, I will launch a new VM using the existing minikube image.

Run:

multipass launch -c 8 -m 16G -n demo minikube

Once completed, you should see a "Launched" message:

multipass launch -c 8 -m 16G -n demo minikube

Launched: demo

Running multipass list will output all the launched virtual machines:

demo Running 192.168.64.20 Ubuntu 22.04 LTS

172.17.0.1

192.168.49.1

NeuVector Setup

To connect to the virtual machine, run multipass shell demo.

The following is an example of what you see when you shell into the VM:

Welcome to Ubuntu 22.04.3 LTS (GNU/Linux 5.15.0-92-generic aarch64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Thu Feb 29 09:16:40 EST 2024

System load: 1.5546875

Usage of /: 13.2% of 38.59GB

Memory usage: 6%

Swap usage: 0%

Processes: 199

Users logged in: 0

IPv4 address for br-1746f5f95e03: 192.168.49.1

IPv4 address for docker0: 172.17.0.1

IPv4 address for enp0s1: 192.168.64.20

IPv6 address for enp0s1: fd3c:28b:5cc5:4064:5054:ff:fe87:5be

NeuVector Setup – minikube

minikube is already started on the new instance; however, I am going to increase its CPUs and memory.

If you need to install minikube, check out the minikube documentation.

First, stop minikube:

Run:

minikube stop

Example Output:

ubuntu@demo:~$ minikube stop

✋ Stopping node "minikube" ...

Powering off "minikube" via SSH ...

1 node stopped.

Update CPUs:

Run:

minikube config set cpus 4

Example Output:

ubuntu@demo:~$ minikube config set cpus 4

❗ These changes will take effect upon a minikube delete and then a minikube start

Update Memory:

Run:

minikube config set memory 8192

Example Output:

ubuntu@demo:~$ minikube config set memory 8192

❗ These changes will take effect upon a minikube delete and then a minikube start

Delete existing minikube:

For the configuration changes to take effect, minikube needs to be deleted and recreated.

Run:

minikube delete

Example Output:

ubuntu@demo:~$ minikube delete

Deleting "minikube" in docker ...

Deleting container "minikube" ...

Removing /home/ubuntu/.minikube/machines/minikube ...

Removed all traces of the "minikube" cluster.

Then start minikube again so it picks up the new settings.

Run:

minikube start

Example Output:

ubuntu@demo:~$ minikube start

minikube v1.32.0 on Ubuntu 22.04 (arm64)

✨ Automatically selected the docker driver. Other choices: ssh, none

Using Docker driver with root privileges

Starting control plane node minikube in cluster minikube

Pulling base image ...

Creating docker container (CPUs=4, Memory=8192MB) ...

Preparing Kubernetes v1.28.3 on Docker 24.0.7 ...

▪ Generating certificates and keys ...

▪ Booting up control plane ...

▪ Configuring RBAC rules ...

Configuring bridge CNI (Container Networking Interface) ...

▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5

Verifying Kubernetes components...

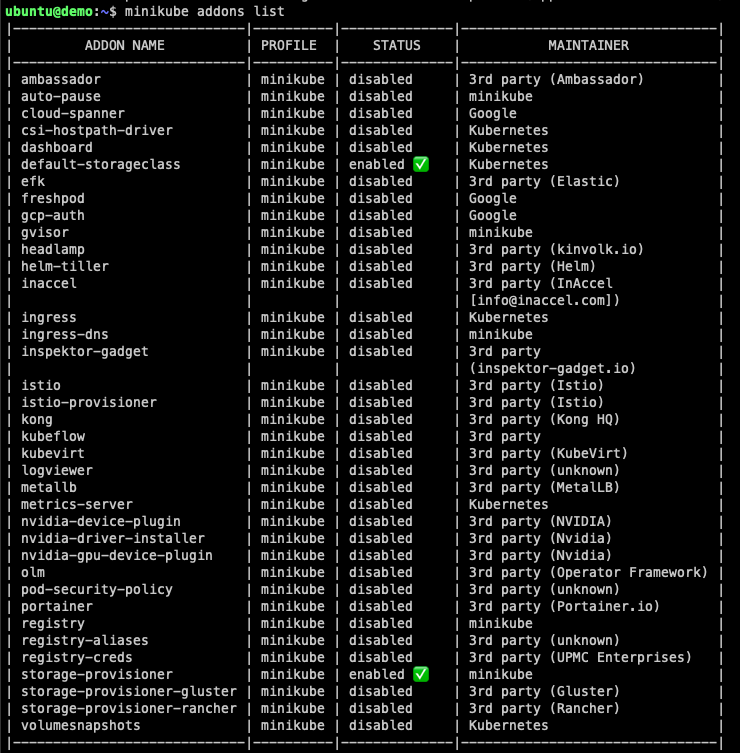

Enabled addons: storage-provisioner, default-storageclass

Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

minikube should now be up and running. Check its status with the following.

Run:

minikube status

Example Output:

ubuntu@demo:~$ minikube status

minikube

type: Control Plane

host: Running

kubelet: Running

apiserver: Running

kubeconfig: Configured

If you want to experiment with minikube further, additional add-ons can be installed; metrics-server and dashboard are typical choices. In this case, we will leave the defaults.

NeuVector Setup – kubectl

This image also comes with kubectl set up:

ubuntu@demo:~$ kubectl version

Client Version: v1.28.7

Kustomize Version: v5.0.4-0.20230601165947-6ce0bf390ce3

Server Version: v1.28.3

NeuVector Setup – helm

Helm is not installed, but can be quickly set up:

ubuntu@demo:~$ helm version

Command 'helm' not found, but can be installed with:

sudo snap install helm

To install, run:

sudo snap install helm --classic

Example Output:

ubuntu@demo:~$ sudo snap install helm --classic

Download snap "core22" (1125) from channel "stable"

Once the installation is complete, you can check the version with helm version:

ubuntu@demo:~$ helm version

version.BuildInfo{Version:"v3.14.2", GitCommit:"c309b6f0ff63856811846ce18f3bdc93d2b4d54b", GitTreeState:"clean", GoVersion:"go1.21.7"}

NeuVector Setup – Helm Install

Add the helm repo, run:

helm repo add neuvector https://neuvector.github.io/neuvector-helm/

For this guide, I'm using the latest version, but older and development versions can be listed with:

helm search repo neuvector --devel -l

When this was originally written on 29 February 2024 (Leap Day!), the latest versions were:

ubuntu@demo:~$ helm search repo neuvector

NAME CHART VERSION APP VERSION DESCRIPTION

neuvector/core 2.7.3 5.3.0 Helm chart for NeuVector's core services

neuvector/crd 2.7.3 5.3.0 Helm chart for NeuVector's CRD services

neuvector/monitor 2.7.3 5.3.0 Helm chart for NeuVector monitor services

Helm Install:

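Setting up NeuVector is simple enough that I will keep most of the default values. I am reducing the controller and scanner replicas to 1; leaving the defaults would overwhelm the system, since minikube is running a single node. This is fine for local and development environments. Note that the command below pins chart version 2.7.6 and image tag 5.3.2, which are newer than the versions listed above; use whichever versions helm search reports for you. Run the following: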

helm upgrade --install neuvector neuvector/core --version 2.7.6 \
  --set tag=5.3.2 \
  --set controller.replicas=1 \
  --set cve.scanner.replicas=1 \
  --create-namespace \
  --namespace neuvector

The repository's README lists additional configuration options.
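If you would rather keep these overrides in a file than on the command line, the three --set values map onto a Helm values file passed with --values. The sketch below assumes the key layout implied by those --set paths (tag, controller.replicas, cve.scanner.replicas); the file name neuvector-values.yaml and both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Write the same overrides as the --set flags into a Helm values file.
# neuvector-values.yaml is a hypothetical default path.
write_nv_values() {
  local file="${1:-neuvector-values.yaml}"
  cat >"$file" <<'EOF'
tag: 5.3.2
controller:
  replicas: 1
cve:
  scanner:
    replicas: 1
EOF
}

# Install or upgrade NeuVector using the values file instead of --set flags.
install_neuvector() {
  local file="${1:-neuvector-values.yaml}"
  helm upgrade --install neuvector neuvector/core --version 2.7.6 \
    --values "$file" \
    --create-namespace \
    --namespace neuvector
}
```

Running write_nv_values and then install_neuvector should be equivalent to the --set command above; a values file is simply easier to review and keep in version control as overrides accumulate.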

When running:

ubuntu@demo:~$ helm upgrade --install neuvector neuvector/core --version 2.7.6 \

--set tag=5.3.2 \

--set controller.replicas=1 \

--set cve.scanner.replicas=1 \

--create-namespace \

--namespace neuvector

Release "neuvector" does not exist. Installing it now.

NAME: neuvector

LAST DEPLOYED: Thu Feb 29 09:34:30 2024

NAMESPACE: neuvector

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Get the NeuVector URL by running these commands:

NODE_PORT=$(kubectl get --namespace neuvector -o jsonpath="{.spec.ports[0].nodePort}" services neuvector-service-webui)

NODE_IP=$(kubectl get nodes --namespace neuvector -o jsonpath="{.items[0].status.addresses[0].address}")

echo https://$NODE_IP:$NODE_PORT

After running helm to install NeuVector, it will take some time for all of the pods to come up and stabilize. Once all pods are running and stable, we should be able to connect.
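Rather than re-running kubectl get pods by hand, a small polling loop can wait until every pod reports Running with all of its containers ready. This sketch assumes the default column layout of kubectl get pods (NAME READY STATUS RESTARTS AGE) and an arbitrary limit of 60 checks, 10 seconds apart; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Read `kubectl get pods` output on stdin. Succeed only if there is at least
# one pod and every pod has STATUS Running and READY of the form n/n.
all_pods_ready() {
  awk 'NR > 1 {
         n++
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") bad++
       }
       END { if (n > 0 && bad == 0) exit 0; exit 1 }'
}

# Poll the neuvector namespace every 10 seconds, giving up after 60 checks.
wait_for_neuvector() {
  local tries=0
  until kubectl get pods --namespace neuvector | all_pods_ready; do
    tries=$((tries + 1))
    if [ "$tries" -ge 60 ]; then
      echo "NeuVector pods are still not ready" >&2
      return 1
    fi
    sleep 10
  done
  echo "All NeuVector pods are Running and Ready"
}
```

Call wait_for_neuvector right after the helm command. As an alternative, kubectl wait --for=condition=Ready pod --all --namespace neuvector --timeout=600s relies on each pod's Ready condition instead of parsing text output.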

Run:

kubectl get pods -n neuvector

Example Output:

NAME READY STATUS RESTARTS AGE

neuvector-controller-pod-554d868cbd-4sk54 1/1 Running 0 3m15s

neuvector-enforcer-pod-gqhsv 1/1 Running 2 (63s ago) 3m15s

neuvector-manager-pod-8589675984-7pl2j 1/1 Running 0 3m15s

neuvector-scanner-pod-5bb668cc99-r7vkq 1/1 Running 0 3m15s

Accessing the NeuVector User Interface

I am going to port-forward this and access it from my local browser. On the virtual machine, run the following command.

kubectl port-forward --address 0.0.0.0 --namespace neuvector service/neuvector-service-webui 8443

Example Output:

ubuntu@demo:~$ kubectl port-forward --address 0.0.0.0 --namespace neuvector service/neuvector-service-webui 8443

Forwarding from 0.0.0.0:8443 -> 8443

This listens on port 8443 on all addresses (0.0.0.0) and forwards traffic to the neuvector-service-webui service.

Accessing Locally

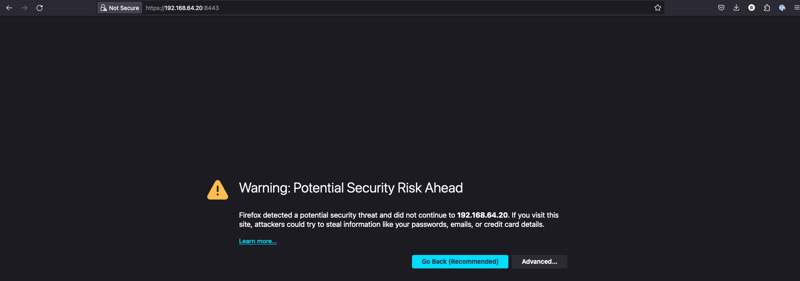

In your local browser, go to https://<ipaddress>:8443.

Please note: the IP address I used is the virtual machine's private IP address. You can check it again by running:

multipass list

Example Output:

Name State IPv4 Image

demo Running 192.168.64.20 Ubuntu 24.04 LTS

172.17.0.1

192.168.49.1

Since NeuVector uses a self-signed certificate, you can ignore the browser warnings and proceed.

By default, username and password are admin:admin.

Accept the EULA and you can log in.

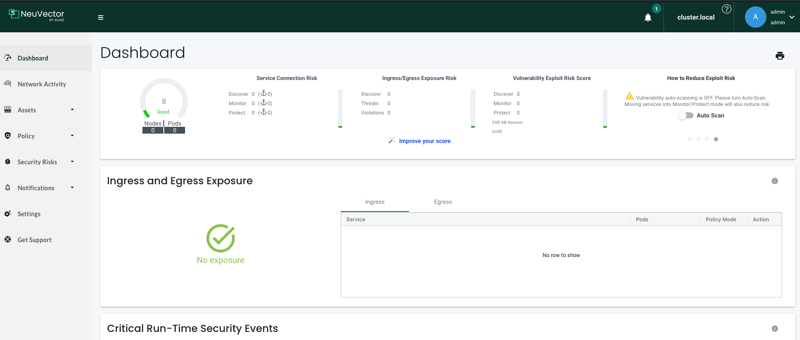

And voilà! If you plan to keep using this instance, update the admin password, and you are done.

Additional Steps – Set up mysql container

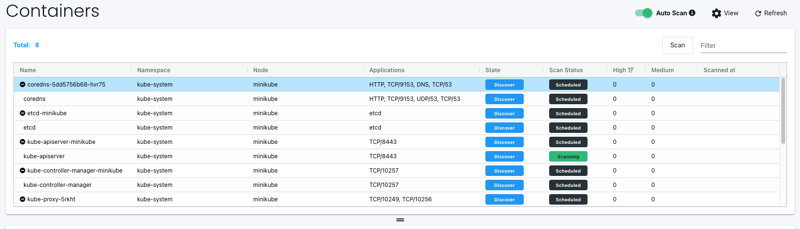

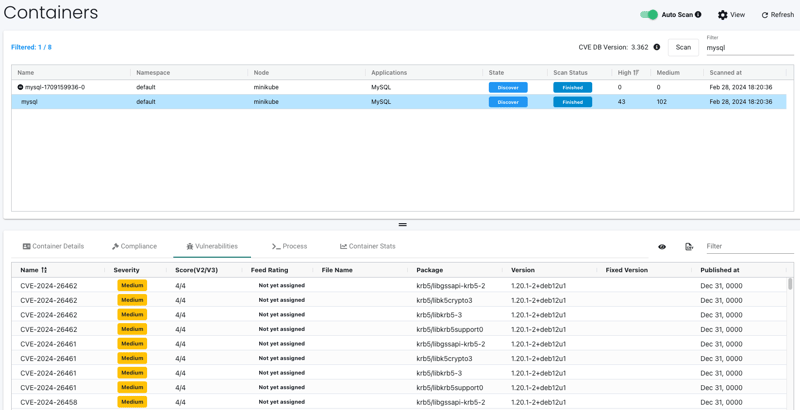

To test NeuVector a bit more, we will add a MySQL service and scan containers and nodes from the NeuVector console.

Add the bitnami repo:

helm repo add bitnami https://charts.bitnami.com/bitnami

Install:

helm install bitnami/mysql --generate-name

In the NeuVector Interface

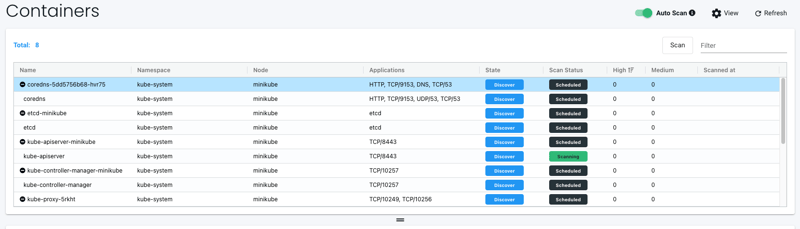

Go to Assets in the navigation pane on the left and expand the dropdown. From the dropdown, select Containers.

Turn on Auto Scan or perform a manual scan:

Auto Scanning:

Scans are scheduled and return results once they complete. Depending on the amount of resources (both scanners and containers), this can take some time; since this is a new cluster, it is relatively quick.

You can filter and view the vulnerabilities which are found:

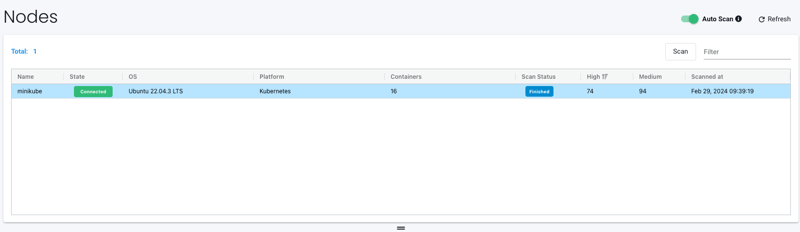

Go to the Nodes page:

You can see that the nodes are also scanned for vulnerabilities.

Conclusion

That is about it, a quick and easy way to test out NeuVector. This is really just scratching the surface when it comes to what features and solutions it offers.

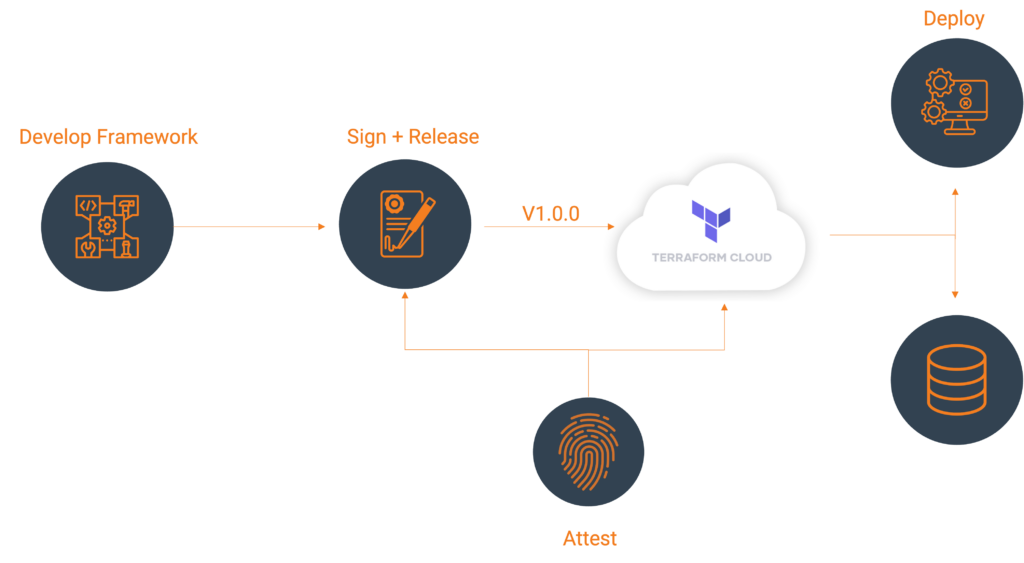

HashiCorp’s Terraform Cloud provides a centralized platform for managing infrastructure as code. It’s a leading provider in remote Terraform management with remote state management, automated VCS integrations, and cost visibility. One of its features, a private registry, can be used to develop internal Terraform providers where control, security, and customizations are paramount.

Let’s explore an example using the Terraform Provider Scaffolding Framework to build a custom Terraform provider and publish it to a private registry. The scaffolding repository provides a starter kit that you can use out of the box, swapping in calls to your own APIs.

Signing Your Provider

Code signing guarantees that the generated artifacts originate from your source; users can verify this authenticity by checking the produced signature against your publicly available signing key. This requires generating a key pair with GnuPG (the GNU Privacy Guard). You can do this with the command below; be sure to replace GPG_PASSWORD, your name, and your email with values that make sense.

gpg --default-new-key-algo rsa4096 --batch --passphrase "${GPG_PASSWORD}" --quick-gen-key 'Your Name <you@example.com>' default default

Export Public Key

With your newly generated key securely stored, the next step is to export it and upload it to Terraform Cloud, which lets the platform verify your signed artifacts when you deploy them. The GPG Key API requires the public key to validate the signature. To list your key IDs, run gpg --list-secret-keys --keyid-format LONG. The key ID appears in the output:

[keyboxd]

---------

sec rsa4096/<KEY ID> 2023-11-22 [SC] [expires: 2026-11-21]

You can then get your public key as a single string, with each newline escaped as \n:

KEY=$(gpg --armor --export ${KEY_ID} | awk '{printf "%s\\n", $0}')

Next, build a payload with that value and POST it to https://app.terraform.io/api/registry/private/v2/gpg-keys. ORG_NAME is your Terraform Cloud organization.

{
  "data": {
    "type": "gpg-keys",
    "attributes": {
      "namespace": "${ORG_NAME}",
      "ascii-armor": "${KEY}"
    }
  }
}

Export Private Key for CI/CD (Optional)

If you plan to use this key in a CI platform, you can also export the private key with gpg --export-secret-keys --armor ${KEY_ID} > /tmp/gpg.pgp and upload it to a secure vault.
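For a CI pipeline, the public-key registration steps can also be scripted. This is a sketch: it reads the long key ID from GnuPG's machine-readable --with-colons listing (field 5 of the first sec record), assumes the armored key only needs its newlines escaped to fit in a JSON string, and expects ORG_NAME, KEY_ID, and a Terraform Cloud API token in TOKEN to be set. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Print the long key ID of the first secret key from
# `gpg --list-secret-keys --with-colons` output (field 5 of the "sec" record).
gpg_key_id() {
  awk -F: '$1 == "sec" { print $5; exit }'
}

# Build the gpg-keys JSON payload: ORG_NAME, then the ASCII-armored key.
# Each newline in the key becomes a literal \n inside the JSON string.
gpg_keys_payload() {
  local org="$1" armor="$2" escaped
  escaped=$(printf '%s\n' "$armor" | awk '{printf "%s\\n", $0}')
  cat <<EOF
{
  "data": {
    "type": "gpg-keys",
    "attributes": {
      "namespace": "${org}",
      "ascii-armor": "${escaped}"
    }
  }
}
EOF
}

# Export the public key and POST it to the private registry GPG key API.
register_gpg_key() {
  gpg_keys_payload "$ORG_NAME" "$(gpg --armor --export "$KEY_ID")" |
    curl -sS --fail \
      --header "Authorization: Bearer ${TOKEN}" \
      --header "Content-Type: application/vnd.api+json" \
      --request POST \
      --data @- \
      "https://app.terraform.io/api/registry/private/v2/gpg-keys"
}
```

For example, KEY_ID=$(gpg --list-secret-keys --with-colons | gpg_key_id) followed by register_gpg_key performs the export and upload in one step.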

Packaging Terraform Providers with GoReleaser

GoReleaser simplifies the process of building and releasing Go binaries. Using GoReleaser, we can bundle different architectures, operating systems, etc.

You will need to create a Terraform registry manifest (terraform-registry-manifest.json), in which the protocol version is essential. If you are using the Plugin Framework, you will want version 6.0; if you are using Plugin SDKv2, you will want version 5.0.

{
  "version": 1,
  "metadata": {
    "protocol_versions": ["6.0"]
  }
}

Configuring GoReleaser

Ensure your goreleaser.yml configuration includes settings for multi-architecture support and signing. This file should live at the provider’s root, next to your main codebase.

before:
  hooks:
    - go mod tidy
builds:
  - env:
      - CGO_ENABLED=0
    mod_timestamp: '{{ .CommitTimestamp }}'
    flags:
      - -trimpath
    ldflags:
      - '-s -w -X main.version={{ .Version }} -X main.commit={{ .Commit }}'
    goos:
      - freebsd
      - windows
      - linux
      - darwin
    goarch:
      - amd64
      - '386'
      - arm
      - arm64
    ignore:
      - goos: darwin
        goarch: '386'
    binary: '{{ .ProjectName }}_v{{ .Version }}'
archives:
  - format: zip
    name_template: '{{ .ProjectName }}_{{ .Version }}_{{ .Os }}_{{ .Arch }}'
checksum:
  extra_files:
    - glob: 'terraform-registry-manifest.json'
      name_template: '{{ .ProjectName }}_{{ .Version }}_manifest.json'
  name_template: '{{ .ProjectName }}_{{ .Version }}_SHA256SUMS'
  algorithm: sha256
signs:
  - artifacts: checksum
    args:
      - "--batch"
      - "--local-user"
      - "{{ .Env.GPG_FINGERPRINT }}"
      - "--output"
      - "${signature}"
      - "--detach-sign"
      - "${artifact}"
    stdin: '{{ .Env.GPG_PASSWORD }}'
release:
  extra_files:
    - glob: 'terraform-registry-manifest.json'
      name_template: '{{ .ProjectName }}_{{ .Version }}_manifest.json'
changelog:
  skip: true

Tag your Branch

git tag 0.0.1
git checkout 0.0.1

Your Git strategy may differ, but GoReleaser uses Git tags to determine versions.
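Because GoReleaser derives the release version from the tag and expects it to follow semantic versioning, a quick pre-flight check can catch a malformed tag before a build. The regular expression below is a simplified approximation of semver (optionally prefixed with v, with an optional pre-release suffix), not the full specification; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Succeed if the argument looks like MAJOR.MINOR.PATCH, optionally prefixed
# with "v" and followed by a pre-release suffix such as -rc.1.
# Simplified check: it does not enforce every rule of the semver spec.
is_semver_tag() {
  [[ "$1" =~ ^v?[0-9]+\.[0-9]+\.[0-9]+(-[0-9A-Za-z.-]+)?$ ]]
}

# Check the tag on the current commit (fails if HEAD has no exact tag).
check_head_tag() {
  local tag
  tag=$(git describe --tags --exact-match) || return 1
  if is_semver_tag "$tag"; then
    echo "Tag $tag looks like a semantic version"
  else
    echo "Tag $tag is not a semantic version" >&2
    return 1
  fi
}
```

Running check_head_tag after checking out the tag confirms both that HEAD is tagged and that the tag has a usable version shape.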

Bundle and Sign Binaries

Run GoReleaser to bundle the binaries locally without publishing. We skip publishing because we will upload them to Terraform Cloud manually.

export GPG_TTY=$(tty)

export GPG_FINGERPRINT=${KEY_ID}

goreleaser release --skip=publish

Now we have our artifacts.

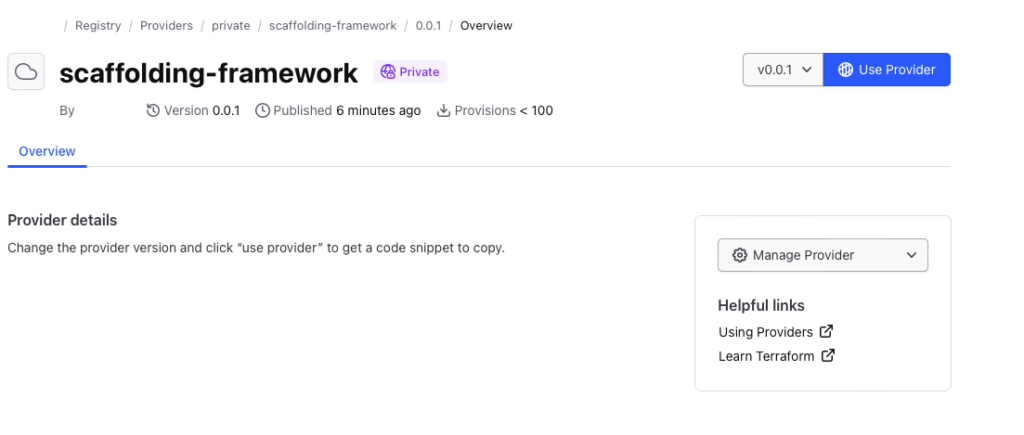
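Before uploading anything, it can be worth checking the artifacts locally: that each file matches its entry in the SHA256SUMS file and that the detached signature verifies. A sketch, assuming the dist/ layout and file names produced by the name_template settings above and that PROVIDER_NAME and VERSION are set; the function names are hypothetical, and sha256sum is used when present with shasum as a fallback.

```bash
#!/usr/bin/env bash
# Print the SHA-256 hash of a file (GNU sha256sum, or shasum on macOS).
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | awk '{print $1}'
  else
    shasum -a 256 "$1" | awk '{print $1}'
  fi
}

# Verify every entry of a checksum file, relative to the directory holding it.
check_sums() {
  local dir="$1" sums="$2"
  (
    cd "$dir" || exit 1
    if command -v sha256sum >/dev/null 2>&1; then
      sha256sum -c "$sums" >/dev/null
    else
      shasum -a 256 -c "$sums" >/dev/null
    fi
  )
}

# Check the checksums, then the detached GPG signature of the SHA256SUMS file.
verify_release() {
  local sums="terraform-provider-${PROVIDER_NAME}_${VERSION}_SHA256SUMS"
  check_sums dist "$sums" && gpg --verify "dist/${sums}.sig" "dist/${sums}"
}
```

If any archive does not match its checksum, verify_release returns non-zero before gpg runs; otherwise gpg reports whether the signature is good.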

Publishing to Terraform Cloud Private Registry

Once you have the signed binaries, you can publish them to the Terraform Cloud private registry. HashiCorp provides a guide, which we will follow.

Register the provider (first time only)

Create a provider config file and POST that body using your Terraform Cloud API token (TOKEN below). A provider name is usually a singular descriptor of what it manages, such as google or aws.

curl --header "Authorization: Bearer ${TOKEN}" \
  --header "Content-Type: application/vnd.api+json" \
  --request POST \
  -d @- \
  "https://app.terraform.io/api/v2/organizations/${ORG_NAME}/registry-providers" <<EOT
{
  "data": {
    "type": "registry-providers",
    "attributes": {
      "name": "${PROVIDER_NAME}",
      "namespace": "${ORG_NAME}",
      "registry-name": "private"
    }
  }
}
EOT

Uploading your Versions

Create Version Shell within Private Registry Providers

curl -H "Authorization: Bearer ${TOKEN}" \
  -H "Content-Type: application/vnd.api+json" \
  --request POST \
  -d @- \
  "https://app.terraform.io/api/v2/organizations/${ORG_NAME}/registry-providers/private/${ORG_NAME}/${PROVIDER_NAME}/versions" <<EOT
{
  "data": {
    "type": "registry-provider-versions",
    "attributes": {
      "version": "${VERSION}",
      "key-id": "${KEY_ID}",
      "protocols": ["6.0"]
    }
  }
}
EOT

The response will contain upload links that you will use to upload the SHA256SUMS and SHA256SUMS.sig files.

"links": {
  "shasums-upload": "https://archivist.terraform.io/v1/object/dmF1b64hd73ghd63",
  "shasums-sig-upload": "https://archivist.terraform.io/v1/object/dmF1b37dj37dh33d"
}

Upload Signatures
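The upload commands for this step expect SHASUM_UPLOAD and SHASUM_SIG_UPLOAD to hold the two links from the create-version response. jq is the more robust choice if it is installed (something like jq -r '.data.links["shasums-upload"]'); the dependency-free sketch below instead pulls a named link out with sed, which assumes each key appears once with a plain string value and no escaped quotes. The function name and the version-response.json file are hypothetical.

```bash
#!/usr/bin/env bash
# Print the string value of a named link (e.g. shasums-upload) from a JSON
# response read on stdin. Simplified parsing: assumes the key occurs once
# and its value contains no escaped quotes.
json_link() {
  sed -n 's/.*"'"$1"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Example usage, after saving the create-version response to a file:
#   SHASUM_UPLOAD=$(json_link shasums-upload < version-response.json)
#   SHASUM_SIG_UPLOAD=$(json_link shasums-sig-upload < version-response.json)
```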

# Replace ${VERSION} and ${PROVIDER_NAME} with actual values
# SHASUM_UPLOAD and SHASUM_SIG_UPLOAD are the two links from the response above
curl -sS -T "dist/terraform-provider-${PROVIDER_NAME}_${VERSION}_SHA256SUMS" "${SHASUM_UPLOAD}"
curl -sS -T "dist/terraform-provider-${PROVIDER_NAME}_${VERSION}_SHA256SUMS.sig" "${SHASUM_SIG_UPLOAD}"

Register Platform for every Architecture and Operating System

# OS, e.g. darwin/linux/windows
# ARCH, e.g. arm/amd64
# FILENAME: terraform-provider-<PROVIDER_NAME>_<VERSION>_<OS>_<ARCH>.zip, as defined through name_template
FILENAME="terraform-provider-${PROVIDER_NAME}_${VERSION}_${OS}_${ARCH}.zip"
SHA=$(shasum -a 256 "dist/${FILENAME}" | awk '{print $1}')

curl -H "Authorization: Bearer ${TOKEN}" \
  -H "Content-Type: application/vnd.api+json" \
  --request POST \
  -d @- \
  "https://app.terraform.io/api/v2/organizations/${ORG_NAME}/registry-providers/private/${ORG_NAME}/${PROVIDER_NAME}/versions/${VERSION}/platforms" <<EOT
{
  "data": {
    "type": "registry-provider-version-platforms",
    "attributes": {
      "shasum": "${SHA}",
      "os": "${OS}",
      "arch": "${ARCH}",
      "filename": "${FILENAME}"
    }
  }
}
EOT

The response will contain a link to upload the provider binary to:

"links": {
  "provider-binary-upload": "https://archivist.terraform.io/v1/object/dmF1b45c367djh45nj78"
}

Upload archived binaries

# PROVIDER_BINARY_URL is the provider-binary-upload link from the response above
curl -sS -T "dist/${FILENAME}" "${PROVIDER_BINARY_URL}"

Repeat the Register Platform and Upload Archived Binaries steps for every architecture and operating system.
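Since these two steps repeat for every zip GoReleaser produced, they lend themselves to a loop. The sketch below assumes the archive names follow the name_template above (terraform-provider-<PROVIDER_NAME>_<VERSION>_<OS>_<ARCH>.zip), that ORG_NAME, PROVIDER_NAME, VERSION, and TOKEN are set, and that the provider-binary-upload link can be pulled from the response with a simple sed match; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Split ".../terraform-provider-NAME_VERSION_OS_ARCH.zip" into "OS ARCH".
parse_platform() {
  local base="${1##*/}"   # drop any directory prefix
  base="${base%.zip}"     # drop the extension
  local arch="${base##*_}"
  base="${base%_*}"
  printf '%s %s\n' "${base##*_}" "$arch"
}

# Build the platform payload. Arguments: OS ARCH FILENAME SHA.
platform_payload() {
  cat <<EOF
{
  "data": {
    "type": "registry-provider-version-platforms",
    "attributes": {
      "shasum": "$4",
      "os": "$1",
      "arch": "$2",
      "filename": "$3"
    }
  }
}
EOF
}

# Register each platform, then upload its zip to the returned link.
register_all_platforms() {
  local url="https://app.terraform.io/api/v2/organizations/${ORG_NAME}/registry-providers/private/${ORG_NAME}/${PROVIDER_NAME}/versions/${VERSION}/platforms"
  local zip filename os arch sha upload_url
  for zip in dist/terraform-provider-"${PROVIDER_NAME}"_"${VERSION}"_*.zip; do
    if [ ! -e "$zip" ]; then
      echo "No matching archives found in dist/" >&2
      return 1
    fi
    filename="${zip##*/}"
    read -r os arch <<<"$(parse_platform "$filename")"
    sha=$(shasum -a 256 "$zip" | awk '{print $1}')
    upload_url=$(platform_payload "$os" "$arch" "$filename" "$sha" |
      curl -sS --fail \
        -H "Authorization: Bearer ${TOKEN}" \
        -H "Content-Type: application/vnd.api+json" \
        --request POST \
        --data @- \
        "$url" |
      sed -n 's/.*"provider-binary-upload"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p')
    if [ -z "$upload_url" ]; then
      echo "No upload link returned for ${filename}" >&2
      return 1
    fi
    curl -sS --fail -T "$zip" "$upload_url"
  done
}
```

Deriving OS and ARCH from the file names keeps the loop in sync with whatever goos/goarch matrix GoReleaser actually built, rather than maintaining a second list by hand.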

Using the provider

Private providers hosted within Terraform Cloud are only available to users within the organization.

When developing locally, make sure you set up credentials by running terraform login, which creates a credentials.tfrc.json file.
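In CI, where an interactive terraform login is not practical, recent Terraform versions can also read an API token from an environment variable named TF_TOKEN_ followed by the hostname, with periods encoded as underscores (hyphens may be encoded as double underscores). A small sketch that derives that variable name; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Derive the credentials environment variable name Terraform reads for a host:
# "TF_TOKEN_" + hostname, with "-" encoded as "__" and "." encoded as "_".
tf_token_var() {
  local host="$1"
  host="${host//-/__}"
  host="${host//./_}"
  printf 'TF_TOKEN_%s\n' "$host"
}
```

For example, export "$(tf_token_var app.terraform.io)=${TOKEN}" sets TF_TOKEN_app_terraform_io for the current shell.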

With authentication set up, you can use the new provider by defining the provider block, substituting in the variables from earlier.

terraform {
  required_providers {
    ${PROVIDER_NAME} = {
      source  = "app.terraform.io/${ORG_NAME}/${PROVIDER_NAME}"
      version = "${VERSION}"
    }
  }
}

provider "${PROVIDER_NAME}" {
  # Configuration options
}

Document Provider

For user consumption, a common practice is to document your provider's resources with terraform-plugin-docs. This generator builds Markdown documentation from examples and schema definitions, which users can then consume. At the time of publication, this feature is not supported within Terraform Cloud. Please talk to your River Point Technology representative for alternative solutions.

Cleanup

To remove the provider from the registry:

Delete version

curl -H "Authorization: Bearer ${TOKEN}" \
  --request DELETE \
  "https://app.terraform.io/api/v2/organizations/${ORG_NAME}/registry-providers/private/${ORG_NAME}/${PROVIDER_NAME}/versions/${VERSION}"

Delete provider

curl -H "Authorization: Bearer ${TOKEN}" \
  --request DELETE \
  "https://app.terraform.io/api/v2/organizations/${ORG_NAME}/registry-providers/private/${ORG_NAME}/${PROVIDER_NAME}"

Deregister GPG Key

curl -H "Authorization: Bearer ${TOKEN}" \
  --request DELETE \
  "https://app.terraform.io/api/registry/private/v2/gpg-keys/${ORG_NAME}/${KEY_ID}"

Conclusion

With a private registry, you get all the benefits of Terraform while still allowing internal consumption. This may be desirable when public providers don’t meet your use case and it comes with a host of benefits:

- More Customization and Control: A private registry allows organizations to maintain control over their proprietary or custom-built Terraform providers. It enables them to manage, version, and distribute these providers securely within the organization.

- Better Security and Compliance: A private registry ensures that only authorized users within the organization can access and utilize specific Terraform providers for sensitive or proprietary infrastructure configurations. This control aids in compliance with internal policies and regulatory requirements.

- Improved Versioning and Stability: With a private registry, teams can maintain a stable versioning system for their Terraform providers. This helps ensure project infrastructure configurations remain consistent and compatible with the specified provider versions.

Publishing custom Terraform providers to the Terraform Cloud private registry involves bundling, signing, and uploading binaries and metadata through the API. Following these steps, you can effectively manage and distribute your Terraform provider to support various architectures and operating systems.

River Point Technology (RPT) is here to guide you through the intricacies of exploring the dynamic cloud landscape. If you’re facing challenges and need assistance in achieving increased customization and oversight, enhanced security and compliance, along with improved versioning and stability, feel free to leave a comment or reach out to us directly.

As the HashiCorp Global Competency Partner of the Year and the only company certified in all three competencies—Security, Networking, and Infrastructure—we stand out as a market leader. Trusted by Fortune 500 companies, we serve as their guide in effectively navigating the dynamic cloud terrain. Contact RPT for guidance in optimizing the journey through the cloud landscape.